|

Putting multicore

processing in context: Part One

Today’s embedded devices are increasingly based on multi-core designs.

Systems-on-a-chip (SoCs) often contain two or more processor cores in

homogeneous or heterogeneous combinations, and FPGA-based designs can include a

virtually unlimited number and variety of cores. An asymmetric multiprocessing

(AMP)-based RTOS is one approach to utilizing multi-core processors; symmetric

multiprocessing (SMP) is another. But from a historical perspective, multiprocessor/multicore designs have been

around almost as long as the computer in general. I believe that Burroughs

introduced the first commercially available one back in 1962; many more have

followed. Most of them had very specific purposes in mind. Many of the early ones were used in data centers, and for scientific

applications. You can also make a case for them being in the early desktops of

the late 80s with the introduction of the math co-processor and device

controllers such as an Ethernet controller. The question is: why are embedded device manufacturers becoming so enamored

with multi-core devices now? One reason is that embedded devices have more and

more tasks being heaped on them. My first cell phone was a StarTek. It had a rudimentary LCD display and

frankly was not very useful for anything other than making a phone call. It had

a 24-hour standby time and maybe an hour talk time once the battery was broken

in. That was not a real problem though since my first plan was for 30 minutes a

month. But look at today’s phones and the demands made on them: built-in cameras,

video capabilities, nice graphical interfaces for browsing the Web, calendars

and all sorts of games. It is no wonder that designers are looking at ways to

get more performance, and better form factor while extending battery life. Why multicores? Adam Smith's

answer Smith suggested that a pin factory that had adopted such a "specialization of

labor" might produce tens of thousands of pins a day whereas a pin factory in

which each worker attempted to produce pins, from start to finish, would produce

very few pins. Heterogeneous multicore designs such as the OMAP processor, which employ both

a DSP and an ARM core, embody Adam Smith’s concepts of both division of labor

and a specialization of labor. This approach is a Since power consumption is both a function of voltage and frequency (power

consumption increases proportionally with frequency and power consumption

increases to the square of voltage increase, the division of labor aspect of

multiprocessing is of particular interest to manufacturers of portable devices.

Most portable electronic devices do not operate at peak capacity of their

processors all the time. However, to handle peak demand, manufacturers have to

provide a processor that can deliver the processing power to meet peak demands.

While the processor can be throttled down between periods of peak performance,

this may not be an option for devices that require operation in an intermediate

performance range. For most processors they must either run at full speed or be

in an idle state. This is where adding a number of slower processors may be appealing to device

manufacturers. If the issue of peak performance can be segmented so that

multiple processors can address peak performance needs, they can then be

throttled down or put to sleep during periods of inactivity. For periods where

performance demands are low, one or two of the low performance processors can

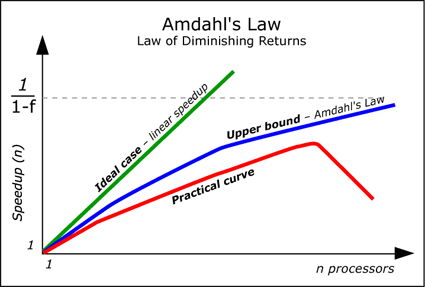

provide satisfactory processing power. The limits on your multicore design are essentially the same as the

constraints on classical multiprocessing systems: Amdahl’s law; serial code

execution; code execution granularity; load-imbalance; synchronization; and

cache coherency. Illustrated in Figure 1 above,

Amdahl’s Law states, “The non-parallel fraction of the code (i.e., overhead)

imposes the upper limit on the scalability of the code”. Thus, the maximum

parallel Speedup S(p) for a program that has a parallel fraction f: S(p) < 1/(1-f) The non-parallel (serial) fraction of the program includes the communication

and synchronization overhead. So according to Amdahl, if you have a program or a suite of tasks that is

comprised of say 50 percent serial code, no matter how many processors you add,

you will only get a performance increase of 200 percent. On the other hand, if

your code contains no serial code, like four tasks that have no communication

requirements, no shared resources, etc, then you can achieve a 1000 percent

speed up by going from one processor to 10. Serial code execution Spinlocks. One prime example

of how code becomes serialized is when there is the potential for processes

running on different processors to attempt to gain access to a resource that is

already in use by another process (Figure 2,

below). OS developers use what is called a Spinlock to act as a gatekeeper for a

resource. A Spinlock (sometimes called a Spinlock Mutex) is a special data

structure used in multiprocessing. It is similar to a Mutex or a semaphore in

that it protects access to resources that cannot be accessed by more than one

process at a time. The primary difference between a Spinlock and a Mutex is that

the Spinlock depends on a hardware ”test and set” mechanism that arbitrates

between two or more processes attempting access at the same time. When a process attempts to access a resource that is in use by another

process, the “blocked” process(s) will “spin” until the resource becomes

available; hence the name Spinlock. In essence, the processes queue up until

they can get access to the resource. There are different algorithms that can be

used to assign priority to processes waiting in a Spinlock. Regardless of the

algorithm used, once a process gets access to a resource, they keep it until

they decide to give it up. The granularity of Spinlocks and the resources that they are used to protect

has dramatic impact on the degree to which application code is serialized.

Devices such as hard drives and other IO devices come to mind when I think of

shared resources but, depending on the type of OS, kernel objects can also be

protected by Spinlocks. The granularity of a Spinlock is a function of the

number and type of resources that they are used to protect. A coarse-grained implementation of a Spinlock would be used to protect all IO

devices. So, for example, once a process gains access to any IO device, even a

process that needs access to an unused resource will spin in the Spinlock until

the lock is released. The other extreme would be to have a Spinlock protecting

each shared resource in the system. Each approach has its advantages. A coarse-grained approach is simple to

implement, but has the potential to serialize the application. The fine-grained

approach makes more resources available in parallel, but is more complicated and

has the potential to exhibit problems such as deadlock and other classical

problems associated with resource protection schemes used in single processor

systems. This problem can be overcome to a large degree through the use of

watchdog applications and other types of heuristics. However, it does add to the

overall overhead of the system, so it comes at a cost. One final note: Spinlocks don’t just protect system data or resources, they

can also be applied to application data. For example, if some device

configuration data consists of a string of bytes, it will need to be locked

during update to ensure data integrity. Granularity of Parallel Code

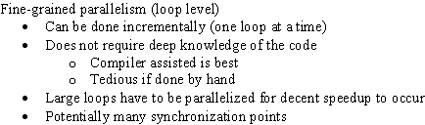

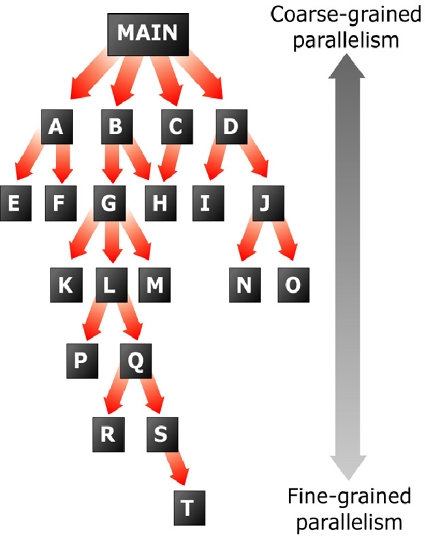

Execution Fine-grained architectures (Table 1,

above) have between two and a couple of dozen processors. An example of a

massively parallel problem approach would be if I want to add 2048 numbers

together and had 1024 processors to do it with. In the first iteration, all 1024

processors each add two numbers together. The second iteration of 512 processors

adds the 1024 numbers together and so on. It would only take 11 iterations to

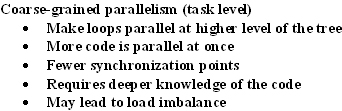

add all 2048 numbers together in this fashion. Course-grained programming (Table 2, above

and Figure 3 below), on the other hand, approaches the problem at a level

we are all more familiar with; segmenting our programs at the task level. Legacy

code can be easily converted to run on multi-core architectures using the

course-grained approach. It is usually a good fit for engineering departments as teams are usually

tasked at the application level. A good understanding of how the overall system

works is necessary so that the different tasks are prioritized so that the

execution is ordered in a way that optimizes performance. The down side of

course-grained parallelism is that it may lead to load imbalance. There are advantages and disadvantages to both approaches. The fine-grained

approach brings massive amounts of processing power to bear on a problem. It

also has the potential to utilize the processors more equally in a load

balancing system. That being said, other than data plane applications there are

very few control plane applications in the embedded space that could be easily

implemented that would benefit from massive parallelism. Furthermore

fine-grained segmentation is almost impossible to do without the use of a

compiler that is explicitly designed to exploit massive parallelism. On the other hand, if one has a compiler that is designed to exploit

fine-grained parallelism, or you have hand written the code to exploit a large

number of processors, then it is easy for all the processors to be utilized

efficiently. Another disadvantage in exploiting fine-grained parallelism is that

there is a large overhead to pay in terms of synchronizing and communication

overhead. Synchronization Overhead In the sum of 2048 numbers example used above, delays incurred due to memory

latency, interrupt or other factors cause the execution times to vary between

the different processing elements. As a result of the different execution times,

tasks that are dependent on these intermediate results essentially stall until

the result is ready. As the parallelism of an application increases, the

potential for synchronism overhead also increases. In general, there is a point

for almost all problem domains where the addition of additional processing

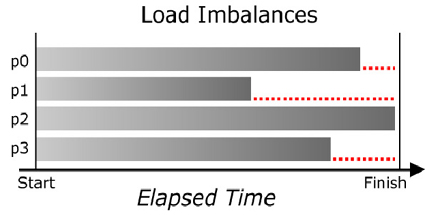

elements will cause a slow down in performance. Load Imbalance As shown in Figure 4 below, if there are a discrete number of tasks that need

to be performed and the execution times are unequal, some cores will be idle

while others are still executing. Cache Coherency in uniprocessor

designs Main memory on the other hand typically takes two or more cycles to access.

When a main memory fetch occurs, the process stalls. This is avoided by keeping

anticipated or frequently used data and instructions in cache. The processor

will only stall when the information it needs is not in cache. One side effect of using this two-layer approach to memory access is that if

cache is written to, then cache and main memory no longer contain the same

information. When this occurs, cache and main memory are considered incoherent.

There are different approaches that can address this problem. The two most

common are “write through” and “write back.” The simplest cache coherency mechanism is “write through.” When writing to

cache using “write through” each time the cache is written to, it also writes

through to main memory. Thus coherency is maintained. The down side is that the

processor stalls while the write through completes. A more advanced implementation for maintaining cache coherency tries to

address stalling during a write by buffering up writes so that they occur less

frequently. When the buffered writes are written to memory, they are written in

“burst mode.” This technique is called “write back.” The write back implementation for obvious reasons is more complicated than

write through. The write back implementation requires that a list of “dirty”

cache lines be maintained. The dirty entries indicate cache lines whose contents

are not the same as in main memory. When the cache loads a cache line from main memory, its contents are marked

as clean. When the processor writes to a cache location, an entry is made to the

cache list to indicate that the cache entry is dirty. Later, depending on when

the “caching algorithm” decides, the dirty cache entries are written to main

memory. Both write through and write back schemes can be implemented either in

hardware or in software. All modern commercial microprocessors I am aware of use

hardware to enforce cache coherency. Multi-core Cache

Coherency Now, in addition to maintaining coherency between memory and a single cache,

the cache coherency scheme must address simultaneous reads and writes to an

individual processor’s cache while maintaining coherency with the shared memory

as well with all the other cache memories that are used by the other processors.

The only efficient mechanism for doing this is by using cache coherency

hardware. Regardless of the hardware assist method used to maintain cache

coherency, using cache in multi-core systems tends to serialize process

execution. The question is to what degree. In later articles, we will explore

how different hardware architectures address this. Todd Brian is product marketing manager at

Accelerated Technology, a

Mentor Graphics division. Part Two of this

series will cover various aspects of multiprocessing from an RTOS perspective,

looking at two different approaches - symmetric (SMP) and asymmetric (AMP)

multiprocessing - their benefits and limitations in real time, deterministic

environments. To learn more about this topic, go to More

about multicores, multiprocessing and tools Copyright 2005 © CMP Media LLC

By Todd Brian, Embedded.com

O=2 9 2006 (9:00 AM)

URL: http://www.embedded.com/showArticle.jhtml?articleID=175802474

One answer to my question may lie in economic theory,

specifically Adam Smith’s “Wealth of Nations.” It opens with a dramatic

recommendation that rather than each worker performing all tasks required for

pin making: rolling the wire, cutting the wire into pieces, sharpening the wire

and bobbing the end, each worker should specialize in performing a single task.

One worker would roll the wire; another would sharpen the wire, another bob the

ends and so on.  good one for

manufacturers of cell phones and other electronic devices, which perform a

multitude of roles: the DSP efficiently performs signal-processing activities

and the ARM core performs the role of a general-purpose processor. (Panel to right)

good one for

manufacturers of cell phones and other electronic devices, which perform a

multitude of roles: the DSP efficiently performs signal-processing activities

and the ARM core performs the role of a general-purpose processor. (Panel to right)

What is

serial execution? Execution is considered serial when one or more cores in a

multi-core system are not executing code. This can occur due to a variety of

reasons. Sometimes it is because there is no reason for code to be executing on

multiple cores. Basically, the system is idle. From a performance perspective,

this is nothing to worry about. However, there are a number of situations where

it would be desirable for all of the processors to be executing code, but can’t

because of data or resource conflict, load-imbalance, synchronization or the

need to maintain cache coherency.

There are two general types of coding approaches that you

can take with multicore devices: fine-grained parallelism (in the case of the

connection machine and others that have hundreds or thousands of processors –

this is called massively parallel) and coarse-grained parallelism.

In the

context of parallel execution, synchronization overhead is the waiting and

communicating incurred by interdependent tasks waiting for other tasks to

intermediate results in order to begin execution again. Unlike waiting for a

Spinlock to release because of resource contention, synchronization overhead is

caused when a task cannot continue execution until an intermediate result is

provided by another task.

Fine-grained

applications typically take advantage of doing the same activity many, many

times in parallel. The example above used a loop unrolling approach.

Course-grained segmentation approaches parallelism at the task level, because it

is almost impossible to design and segment code in such a way that each task has

the same execution time. The different execution times lead to what is called

“load imbalance.”

Cache memory is used to speed execution of a microprocessor.

Cache memory is typically implemented as a relatively small amount of high-speed

memory. Unlike main memory, cache memory can satisfy memory reads and writes at

processor frequency.

Utilizing cache in a multi-core design takes the problem of

cache coherency to the next level. There are a number of multicore architectures

on the market now that utilize shared memory. These architectures also utilize

cache memory to speed execution.